Two HTTP Caching Extensions

Wednesday, 12 December 2007

We use caching extensively inside Yahoo! to improve scalability, latency and availability for back-end HTTP services, as I’ve discussed before.

However, there are a few situations where the plain vanilla HTTP caching model doesn’t quite do the trick. Rather than come up with one-off solutions to our problems, we tried going in the other direction: finding the most general solution that still met our needs, in the hopes of meeting others’ as well. Here are two such extensions (with specs and an implementation).

stale-while-revalidate

The first problem you’ve got when you rely on HTTP caching for performance is simple — what happens when the cache is stale? If fresh responses come in a small number of milliseconds (as they usually do in a well-tuned cache), while stale ones take 200ms or more (as running code often leads to), users will notice (as will your execs).

The naïve solution is to pre-fetch things into cache before they become stale, but this leads to all sorts of problems: deciding when to pre-fetch is a major headache, and if you don’t get it right, you’ll overload your cache, the network or your back-end systems, if not all three.

A more elegant way to do this is to give the cache permission to serve slightly stale content, as long as it refreshes things in the background.

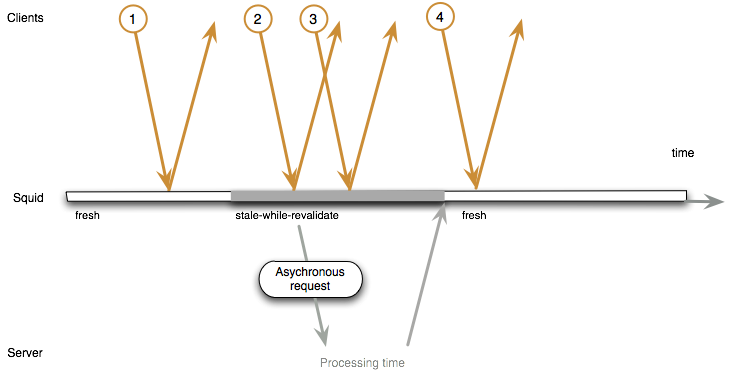

Above, request #1 is served from a fresh cache, as per normal. When the cache becomes stale and stale-while-revalidate is in effect, request #2 kicks off an asynchronous request back to the origin server, while still being served from cache as if it were fresh. Request #3 is served the same way, because it’s still inside the stale-while-revalidate “window”. Assuming that the cache is successfully updated, #4 gets served fresh from cache, because that’s what it is now.

So, in a nutshell, stale-while-revalidate hides back-end latency from your clients by taking some liberty with freshness (which you control). See the stale-while-revalidate Internet-Draft for more information.
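To make the windows concrete, here is a minimal Python sketch of the decision a cache might make for each request. It assumes the response carried something like `Cache-Control: max-age=600, stale-while-revalidate=30`; the function name `classify`, the returned labels and the exact boundary handling are made up for illustration and are not taken from the draft or from any implementation.

```python
def classify(age, max_age, stale_while_revalidate):
    """Decide how a cache could treat a stored response.

    All arguments are in seconds. The names and labels are hypothetical.
    """
    if age < max_age:
        # Still fresh: serve from cache without contacting the origin.
        return "fresh"
    if age < max_age + stale_while_revalidate:
        # Inside the window: serve the stale copy immediately and
        # refresh it from the origin in the background.
        return "stale-revalidate-async"
    # Past the window: the client has to wait for the origin.
    return "revalidate-sync"


# With max-age=600 and stale-while-revalidate=30:
for age in (100, 610, 700):
    print(age, classify(age, 600, 30))
```

The window is something the origin chooses, so the trade-off between freshness and latency stays under the service owner’s control.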

stale-if-error

The other issue we had was what to do when services go down. In many cases, it’s preferable not to show users a “hard” error, but instead to use slightly stale content, if it’s available. Stale-if-error allows you to do this, again in a way that’s controllable by you.

For example, Yahoo! Tech has a number of modules on its front page that are sourced from services. If a back-end service has a glitch, in many cases it’s better to show news (for example) that’s a few minutes old, rather than have a blank space on the page. Stale-if-error makes this possible.

Again, see the stale-if-error Internet-Draft for details.
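The fallback can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the response is assumed to have carried something like `Cache-Control: max-age=600, stale-if-error=1200`, the origin is modelled by a hypothetical `fetch_origin` callable, and an “error” is taken to mean a 500, 502, 503 or 504 status or a failure to connect.

```python
# Status codes treated as errors in this sketch (an assumption).
ERROR_STATUSES = {500, 502, 503, 504}


def respond(age, max_age, stale_if_error, cached_body, fetch_origin):
    """Return (body, source) for one request against one cached entry.

    fetch_origin is a hypothetical callable returning (status, body);
    it may raise OSError when the origin cannot be reached at all.
    """
    if age < max_age:
        return cached_body, "cache (fresh)"
    try:
        status, body = fetch_origin()
        failed = status in ERROR_STATUSES
    except OSError:
        failed = True
    if not failed:
        return body, "origin"
    if age < max_age + stale_if_error:
        # The origin is failing, but the stale copy may still be used.
        return cached_body, "cache (stale-if-error)"
    # Too stale even for the fallback: surface the error.
    return None, "error"
```

In the Yahoo! Tech case, a news module would keep showing the last cached headlines while the call behind `fetch_origin` is failing, until the stale-if-error window runs out.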

A Word About Cache-Control

People who have looked at these often comment on their requirement for a Cache-Control header; they often just want to be able to configure their cache manually, rather than go around modifying HTTP headers. In fact, we got this request from so many people that we added this capability in the implementation (see below).

That said, my preference is for the Cache-Control extensions, and I always strongly encourage people to use them. Why? Because, while it’s easy for an admin to go into a cache and change things, you then have decoupled the URIs (services) from their metadata; if the services change, it isn’t obvious that some cache configuration somewhere may have to change as well. Additionally, if you have multiple clients caching your data, you then have to go out and remember where all of them are (chances are, you’ll miss one), and configure each. Not good practice.
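As a rough illustration of metadata travelling with the response, here is how a cache could read the two extension directives out of a Cache-Control header value. The parser is deliberately naive (it splits on commas, so it would mishandle quoted values that contain commas) and the function name is hypothetical.

```python
def parse_cache_control(value):
    """Parse a Cache-Control header value into a dict of directives.

    Directives without an argument map to None. Naive sketch: quoted
    strings containing commas are not handled.
    """
    directives = {}
    for part in value.split(","):
        part = part.strip()
        if not part:
            continue
        name, _, arg = part.partition("=")
        directives[name.strip().lower()] = arg.strip().strip('"') or None
    return directives


header = "max-age=600, stale-while-revalidate=30, stale-if-error=1200"
cc = parse_cache_control(header)
print(cc["stale-while-revalidate"], cc["stale-if-error"])
```

Because the values arrive with each response, every cache that sees the response gets the same policy without any per-cache configuration.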

Status

Both of these extensions are documented and, in my mind, pretty stable; the I-Ds have expired, but AFAICT all I need to do is double-check things, re-submit them and request publication (as Informational RFCs). I’m going to wait a little while to see if anybody has some feedback that I can incorporate.

We also have an implementation of both in Squid, coded by Henrik. Currently, there’s a changeset sitting on 2.HEAD, but hopefully it’ll get incorporated in 2.7. Note that the changeset doesn’t have support for the Cache-Control extensions, but only for the squid.conf directives for controlling these mechanisms; when the drafts start progressing, that should change.

The intent here is to make these features available to anyone who wants them; we don’t want to maintain private Squid extensions, and Squid isn’t the only interesting cache in the world. Enjoy, thanks again to Henrik and Yahoo!, and again I’d love any feedback you have.
